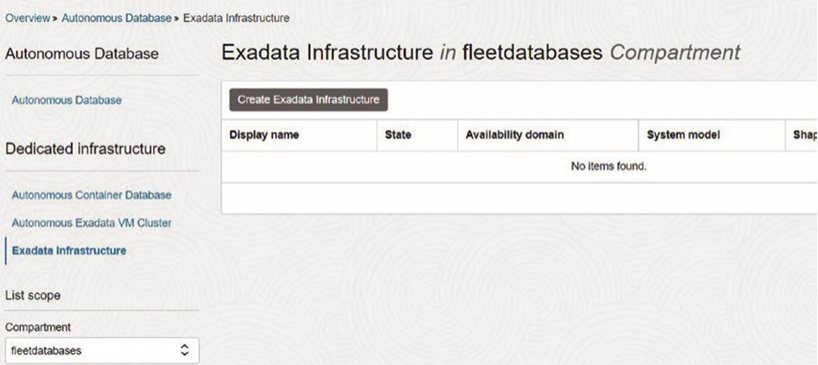

The ADB Dedicated architecture consists of the following:

• Exadata infrastructure

• Autonomous VM cluster

• Autonomous Container Database

• Autonomous Database

The Exadata infrastructure can be in an Oracle Cloud Infrastructure (OCI) region or can be Exadata Cloud@Customer (that is, in your own data center). It includes the compute nodes, storage, and networking.

The VM cluster is the set of virtual machines that the Autonomous Container Databases run on, and it provides high availability across its nodes. The VMs will be allocated all of the resources of the Exadata infrastructure.

The Autonomous Container Database is the CDB that will be set up to manage the pluggable databases. Autonomous Databases are pluggable databases that can be configured to be transactional or configured for data warehouse workloads.
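The containment hierarchy described above can be pictured with a small data model. This is only an illustrative sketch: the class names, fields, and sample values are hypothetical and are not part of any OCI SDK or API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AutonomousDatabase:
    name: str
    workload: str  # illustrative values: "OLTP" (transactional) or "DW" (data warehouse)


@dataclass
class AutonomousContainerDatabase:
    name: str
    databases: List[AutonomousDatabase] = field(default_factory=list)


@dataclass
class AutonomousVmCluster:
    name: str
    container_databases: List[AutonomousContainerDatabase] = field(default_factory=list)


@dataclass
class ExadataInfrastructure:
    name: str
    location: str  # illustrative values: "OCI region" or "Cloud@Customer"
    vm_clusters: List[AutonomousVmCluster] = field(default_factory=list)


def list_databases(infra: ExadataInfrastructure) -> List[Tuple[str, str, str, str]]:
    """Walk the hierarchy and return (cluster, CDB, ADB, workload) tuples."""
    return [
        (cluster.name, cdb.name, adb.name, adb.workload)
        for cluster in infra.vm_clusters
        for cdb in cluster.container_databases
        for adb in cdb.databases
    ]


# Hypothetical sample: one infrastructure, one VM cluster, one CDB, two ADBs.
sample = ExadataInfrastructure(
    name="exa1",
    location="OCI region",
    vm_clusters=[
        AutonomousVmCluster(
            name="avm1",
            container_databases=[
                AutonomousContainerDatabase(
                    name="acd1",
                    databases=[
                        AutonomousDatabase("orders", "OLTP"),
                        AutonomousDatabase("reporting", "DW"),
                    ],
                )
            ],
        )
    ],
)
```

Calling `list_databases(sample)` walks from the infrastructure down to each pluggable database, which mirrors the order in which the components are provisioned.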

Provisioning will include all of these components. Fleet administrators will need the right policies and permissions at the cloud tenancy level and then need system DBA permissions to create CDBs and PDBs. The architecture should use a compartment in the tenancy to properly allocate resources and policies at the right level. In the OCI tenancy, create a compartment for the users, VMs, databases, and other resources.

Policies

After creating a compartment in the tenancy (called fleetdatabases in our examples), create a group, fleetDBA, for the fleet administrators. Policies are then written for this group, and users are added to it.

Here is a list of policies that can be manually edited in the OCI console under Policies for the compartment:

Allow group fleetDBA to manage cloud-exadata-infrastructures in compartment fleetdatabases

Allow group fleetDBA to manage autonomous-database-family in compartment fleetdatabases

Allow group fleetDBA to use virtual-network-family in compartment fleetdatabases

Allow group fleetDBA to use tag-namespaces in compartment fleetdatabases

Allow group fleetDBA to use tag-defaults in compartment fleetdatabases
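Because these statements all follow the same pattern, they can be generated rather than typed by hand. The following is a minimal sketch, assuming you only need the statement text to paste into the console or feed into your own tooling; the function and variable names are hypothetical, and nothing here calls OCI.

```python
from typing import List, Tuple

# (verb, resource type) pairs taken from the fleet administrator policies above.
FLEET_GRANTS: List[Tuple[str, str]] = [
    ("manage", "cloud-exadata-infrastructures"),
    ("manage", "autonomous-database-family"),
    ("use", "virtual-network-family"),
    ("use", "tag-namespaces"),
    ("use", "tag-defaults"),
]


def policy_statement(group: str, verb: str, resource: str, compartment: str) -> str:
    """Format one policy statement in the form used in this chapter."""
    return f"Allow group {group} to {verb} {resource} in compartment {compartment}"


def fleet_policies(group: str = "fleetDBA", compartment: str = "fleetdatabases") -> List[str]:
    """Build the full set of fleet administrator statements for one compartment."""
    return [policy_statement(group, verb, resource, compartment)
            for verb, resource in FLEET_GRANTS]


if __name__ == "__main__":
    for statement in fleet_policies():
        print(statement)
```

Generating the statements from one list keeps the group and compartment names consistent across every line, which is where hand-edited policies tend to drift.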

Note: Compartments, groups, and policies can all be created through the OCI console. If you want to automate this process and use either the command line or scripts, you can create Terraform scripts for consistent provisioning across environments.

Fleet administrators can either be given permission to grant database users access to Autonomous Databases, or policies can be created directly for the database user groups. These are the users who manage and use the Autonomous Databases in their own compartment in the tenancy.

Policies depend on the environment and on what the users are allowed to do. Some environments allow additional policies, and some groups of users may be granted only a subset of them. Here are some additional examples:

Allow group ADBusers to manage autonomous-databases in compartment ADBuserscompartment

Allow group ADBusers to manage autonomous-backups in compartment ADBuserscompartment

Allow group ADBusers to use virtual-network-family in compartment ADBuserscompartment

Allow group ADBusers to manage instance-family in compartment ADBuserscompartment

Allow group ADBusers to manage buckets in compartment ADBuserscompartment
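When reviewing a set of statements like these, it can help to see at a glance which groups hold the broad manage verb. Here is a minimal sketch that parses only the simple "Allow group ... to ... in compartment ..." form shown in this chapter; the regular expression and helper names are hypothetical, and real policy syntax has more variants than this handles.

```python
import re
from collections import defaultdict
from typing import Dict, List

# OCI policy verbs, from least to most permissive.
VERBS = ("inspect", "read", "use", "manage")

# Matches only the simple statement form used in this chapter.
STATEMENT = re.compile(r"^Allow group (\S+) to (\w+) (\S+) in compartment (\S+)$")


def parse_statement(text: str) -> Dict[str, str]:
    """Split one statement into group, verb, resource, and compartment."""
    match = STATEMENT.match(text.strip())
    if match is None:
        raise ValueError(f"Unrecognized statement: {text!r}")
    group, verb, resource, compartment = match.groups()
    if verb not in VERBS:
        raise ValueError(f"Unknown verb {verb!r} in: {text!r}")
    return {"group": group, "verb": verb,
            "resource": resource, "compartment": compartment}


def manage_grants(statements: List[str]) -> Dict[str, List[str]]:
    """Return, per group, the resource types it is allowed to manage."""
    result: Dict[str, List[str]] = defaultdict(list)
    for text in statements:
        parsed = parse_statement(text)
        if parsed["verb"] == "manage":
            result[parsed["group"]].append(parsed["resource"])
    return dict(result)


ADB_USER_POLICIES = [
    "Allow group ADBusers to manage autonomous-databases in compartment ADBuserscompartment",
    "Allow group ADBusers to manage autonomous-backups in compartment ADBuserscompartment",
    "Allow group ADBusers to use virtual-network-family in compartment ADBuserscompartment",
    "Allow group ADBusers to manage instance-family in compartment ADBuserscompartment",
    "Allow group ADBusers to manage buckets in compartment ADBuserscompartment",
]
```

Calling `manage_grants(ADB_USER_POLICIES)` lists the four resource types that ADBusers can manage and leaves out virtual-network-family, which is granted only at the use level.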

Users receive their permissions by being added to the groups named in the policies. The fleet administrators would be in the fleetDBA group, and those just using ADBs would be in the ADBusers group.